Master's Thesis

Exploring the Impact of Large Language Models (LLMs)

on Conceptual Learning in Higher Education:

An Analysis of AI-Driven Conversational Tools (ChatGPT)

Supervisor: Eleftherios Papachristos

Norwegian University of Science and Technology (NTNU)

Master of Science (MSc) in Interaction Design

January, 2025

Grade: A

Motivation

This thesis reflects my interest in generative AI conversational tools, especially LLMs like ChatGPT.

When I reviewed the literature, I identified a gap, which became the focus of the thesis.

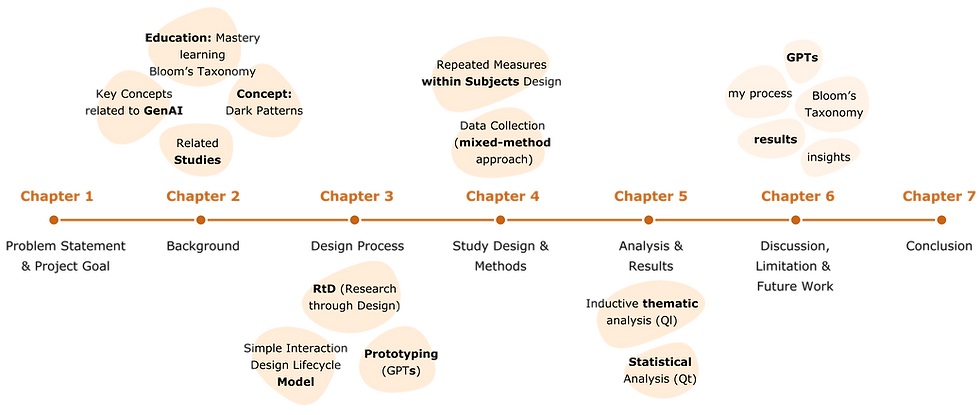

Summary

In this study, I explored the user experience and effectiveness of AI-driven conversational tools, particularly LLMs, in a higher-education context. The goal was to investigate the value of LLMs in facilitating the understanding of conceptually complex subjects.

Based on an evaluation of current LLMs, I chose ChatGPT-4 as the LLM for this project because of its advantages over the others. During the design process, I discovered custom GPTs, versions of ChatGPT that can be trained on a specific subject, and added one to the study as an alternative to ChatGPT-4. For the traditional online method, I chose an official website because of its trustworthiness. In short, this study compared the effectiveness and user experience of these learning tools.

RQ1: “Do LLMs provide better means of understanding concepts, compared to traditional methods of gathering and reading information online?”

RQ2: “Do LLMs offer a better user experience than traditional methods?”

- Participants: 38 students at both bachelor’s and master’s levels, from 7 academic disciplines.
- Learning tools' value, effectiveness and user experience were tested and compared.
- Learning Tools Compared:
  - ChatGPT-4
  - Custom GPT (domain-specific trained version of ChatGPT)
  - An official website
- Data Collection:
  - Pre- and post-study questionnaires (quantitative data)
  - Interviews (qualitative data)
- Data Analysis: Statistical and thematic methods

Results

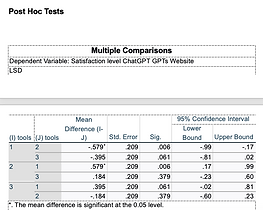

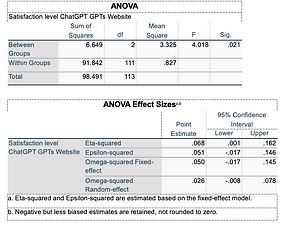

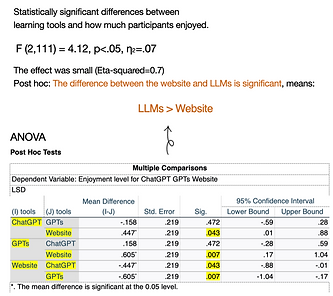

LLMs offered better UX than traditional online learning methods.

In terms of "Enjoyment, Satisfaction, Increasing Motivation":

- GPTs, ChatGPT > Website

In terms of "Trustworthiness":

- Website > GPTs, ChatGPT

However, custom GPTs showed potential to be trustworthy, because their trainer was seen as a credible source.

Among all the learning tools, custom GPTs appeared to offer the most benefits and value for use in education. They were also found to be more satisfactory than ChatGPT.

- GPTs > ChatGPT

The process and details of the thesis follow.

1. Problem Statement

Advancements in AI-driven conversational tools, especially LLMs like ChatGPT, have transformed how we access information. They have brought new forms of human-machine collaboration that are reshaping traditional teaching and learning.

ChatGPT reached 100 million users in just three months and amazed the world. However, it drew mixed reactions in academia: some institutions banned it over concerns, while others saw its potential to make learning more interactive and engaging.

Research Questions

The primary goal of this thesis is to investigate LLMs’ educational value in facilitating the understanding of conceptual subjects.

RQ1: “Do AI-based conversational tools (LLMs, such as ChatGPT) provide better means of understanding concepts in an educational context, compared to traditional methods of gathering and reading information online?”

RQ2: “Do LLMs, such as ChatGPT, offer a better user experience than traditional methods?”

2. Background

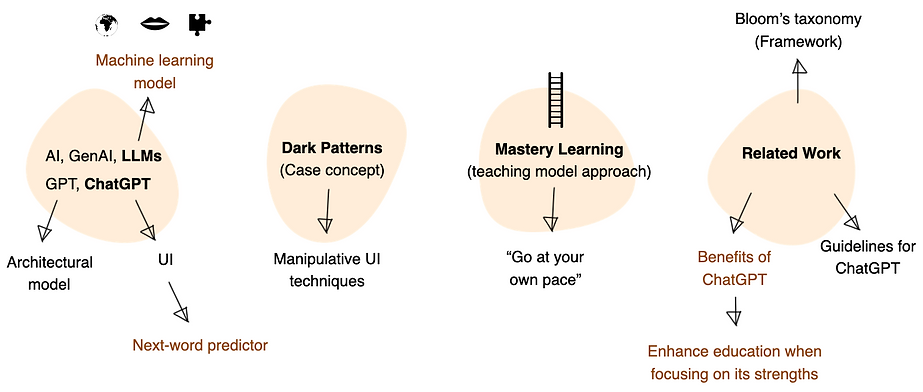

LLMs (Large Language Models): AI models trained on public data to generate human-like text by recognizing patterns.

GPT: OpenAI’s architecture used to build LLMs.

ChatGPT: A user interface built on GPT that predicts text without true understanding.

Dark Patterns: Manipulative UI design tactics that blur ethical and legal lines.

Mastery Learning: A teaching model where students progress after mastering each concept.

BT (Bloom’s Taxonomy): A framework used to structure learning objectives and evaluate mastery.

ChatGPT in Education: Effective when used with clear guidelines and focused on its strengths.

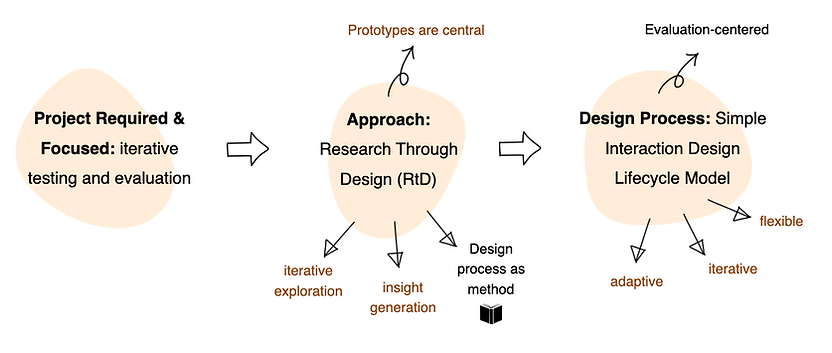

3. Design Process

Answering the research questions required iterative testing and evaluation. I therefore used the Research through Design (RtD) approach, which supports iterative exploration and the generation of insights. RtD uses the design process itself as a method for uncovering knowledge, and prototypes play a central role in it.

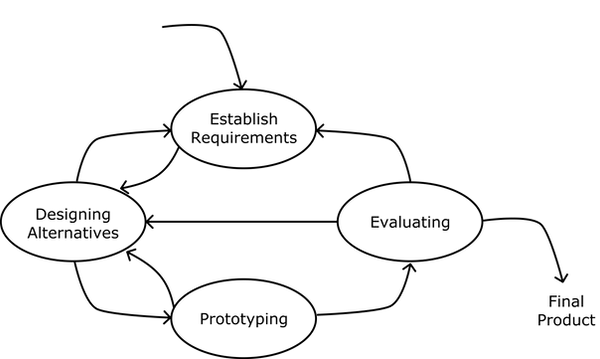

I used the simple interaction design lifecycle model for the design process because its adaptive, iterative, and flexible approach was well suited to developing the study and its tools (prototypes).

Design Process - Lifecycle Model

- Evaluate: I manually evaluated current LLMs through literature review, benchmark analysis, and expert insights, ultimately selecting ChatGPT-4 for its strengths.
- Design Alternatives: I explored custom GPTs as an alternative, discovering they can be trained on specific subjects using prompt-based customization.
- Establish Requirements: Using Revised Bloom’s Taxonomy, I defined learning objectives and user needs for conceptual learning, forming the functional requirements for the prototypes.
- Prototyping: I built and refined prototypes by training GPTs with tailored prompts and Bloom’s learning activities, iterating through trial and error to reach the desired quality.

Figure 1: Simple interaction design lifecycle model (Sharp, Rogers, & Preece, 2007).

Design Process - Prototyping

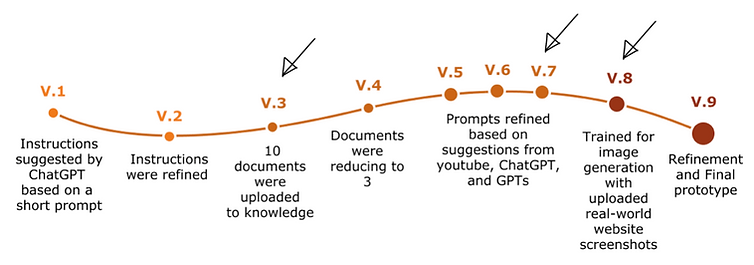

Prototyping the GPTs was long and iterative, resulting in 9 prototypes.

I consider the third, seventh, and eighth the most valuable stages.

Prototyping Custom GPTs

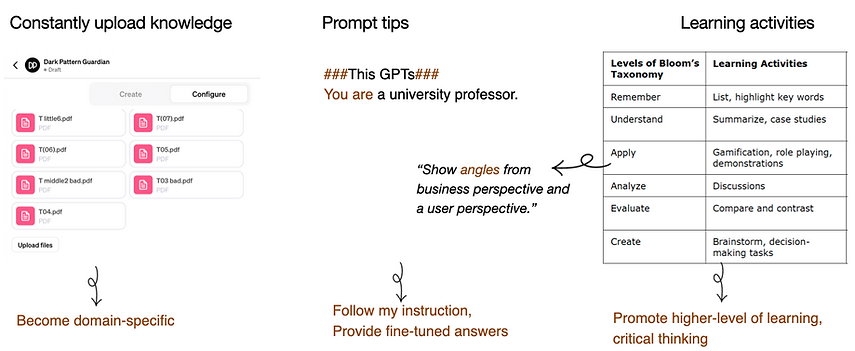

What made the custom GPTs unique:

- I consistently uploaded credible information as "knowledge" for their training without overwhelming them, so they became domain-specific.
- I used prompting tips to make the GPTs follow my instructions, so they provided more fine-tuned answers.
- I used learning activities from Bloom's Taxonomy for each level of learning and tailored them to dark patterns. This promoted critical thinking.
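As a rough illustration of the third point, the instruction text for a custom GPT could be assembled from one learning activity per level of Revised Bloom's Taxonomy. This is only a sketch: the six level names are standard, but the activities, the wording of the instructions, and the `build_instructions` helper are hypothetical and are not the prompts used in the thesis.

```python
# Hypothetical sketch: assemble instruction text for a custom GPT.
# The level names come from Revised Bloom's Taxonomy; the activities are
# illustrative placeholders, not the prompts used in the thesis.
BLOOM_ACTIVITIES = {
    "Remember": "Ask the student to define dark patterns in their own words.",
    "Understand": "Ask the student to explain why a given design is manipulative.",
    "Apply": "Describe a checkout flow and ask the student to spot the dark pattern.",
    "Analyze": "Ask the student to compare two dark patterns and their effects on users.",
    "Evaluate": "Ask the student to judge whether a design crosses an ethical line.",
    "Create": "Ask the student to redesign a manipulative screen so it is fair.",
}


def build_instructions(topic: str, activities: dict[str, str]) -> str:
    """Return instruction text that walks through the levels in order."""
    lines = [
        f"You are a tutor for the topic: {topic}.",
        "Answer only from the uploaded knowledge files; say so when they do not cover a question.",
        "Guide the student through these levels in order:",
    ]
    # Dicts keep insertion order, so the levels stay in taxonomy order.
    for number, (level, activity) in enumerate(activities.items(), start=1):
        lines.append(f"{number}. {level}: {activity}")
    return "\n".join(lines)


instructions = build_instructions("dark patterns", BLOOM_ACTIVITIES)
print(instructions)
```

Keeping the activities in a single mapping makes it easy to revise one level at a time between prototype iterations.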

Image Generation Progress of Custom GPTs

The biggest challenge was drawing a mental image without generating an image.

However, repeated prompting and uploading screenshots from real websites worked reasonably well in the end.

The GPTs started to generate visuals that made sense.

4. Study - Methods

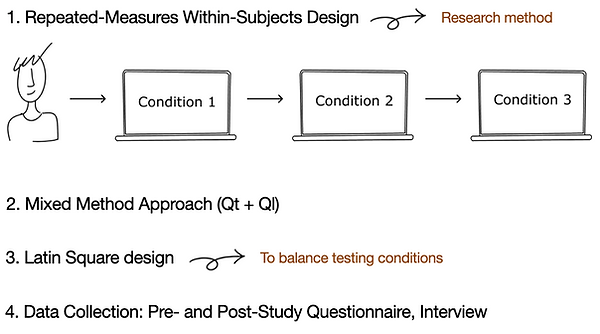

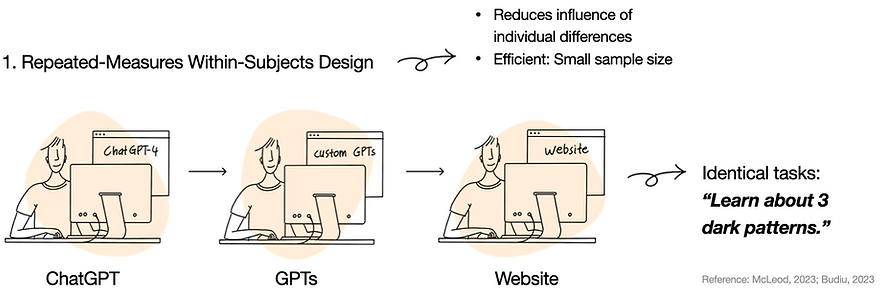

Why Repeated-Measures Within-Subjects Design?

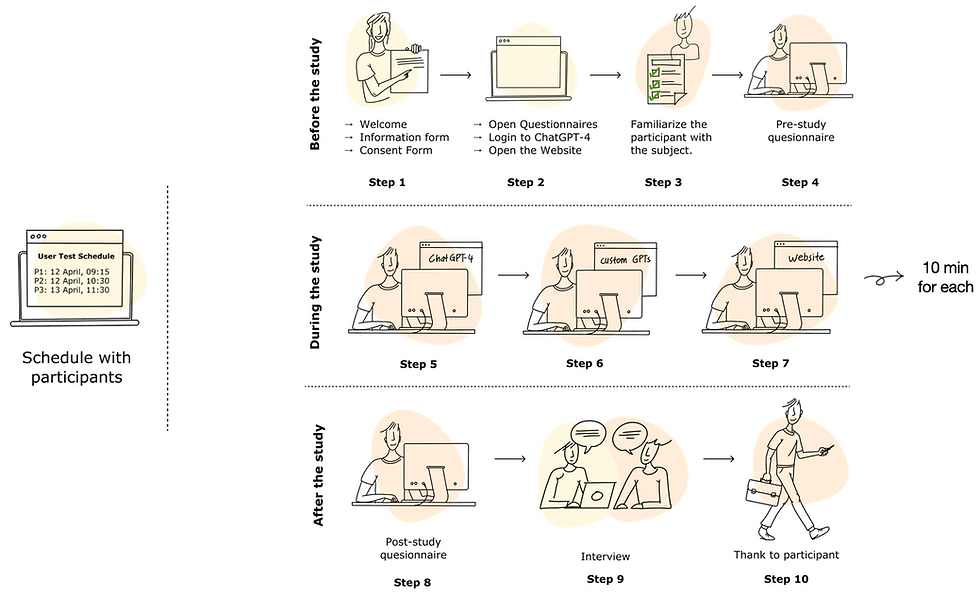

User Test Procedure

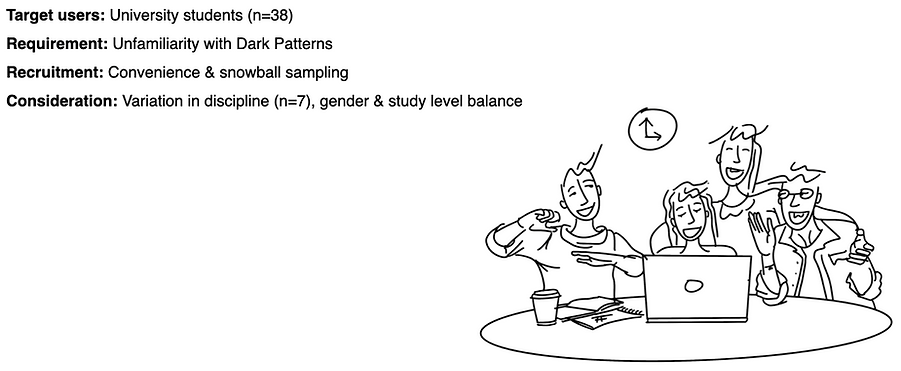

Participants:

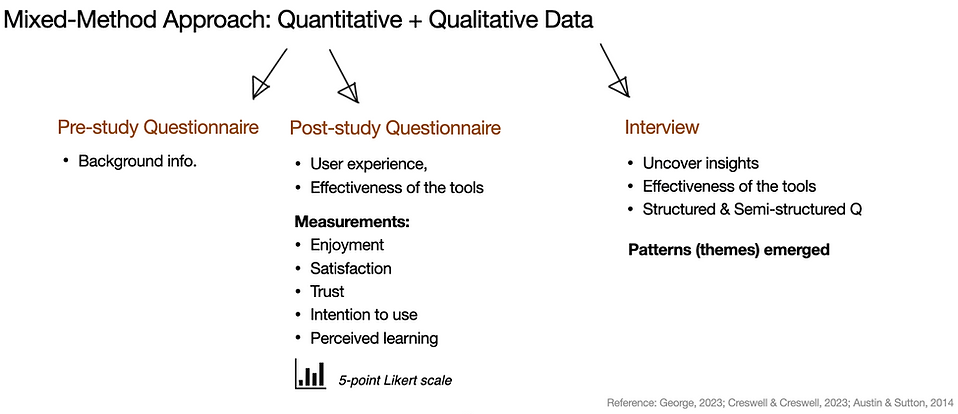

Data Collection & Measurements:

5. Results - Process of Analysis

Transforming extensive and complex data into clear, simple, actionable insights

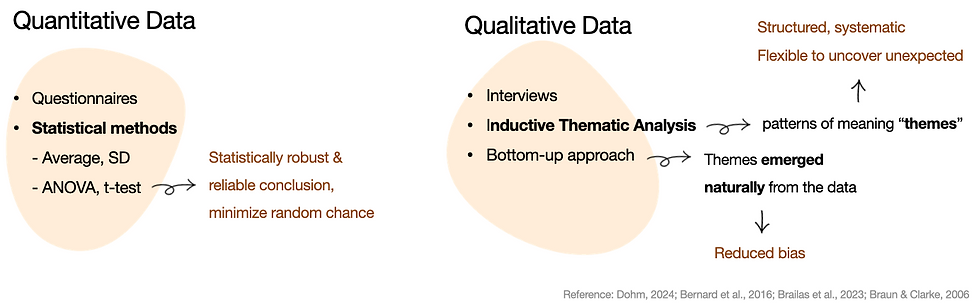

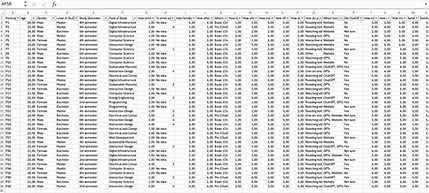

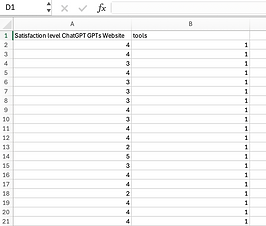

1. Transforming Quantitative Data

Quantitative data were collected from pre- and post-study questionnaires.

Analysis methods: Statistical methods.

Tools: Excel, SPSS

Analysis Process

-

Exporting Data: Data was exported from Nettskjema (the online survey tool) in Microsoft Excel format.

-

Data Cleaning: Responses were checked to ensure there were no incomplete responses, and formatting was standardized for consistency (e.g., numeric values for Likert scales, proper labels for variables).

-

Data Organization: Columns were labeled to match questionnaire questions, and features like filtering were used for efficient sorting.

-

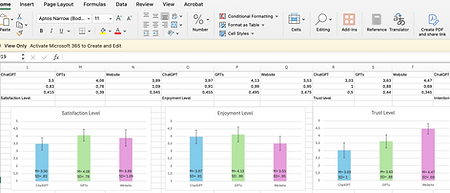

Data Summarization: Averages and standard deviations for Likert-scale questions were calculated. Trends were visualized with charts and graphs.

-

Inferential Analysis: Relationships between variables were examined using ANOVA and t-test, providing statistically robust and reliable conclusions while minimizing the likelihood of random chance influencing the results (Dohm, 2024).

-

Interpretation: Trends and significant differences were identified and linked to research objectives (e.g., determining the most preferred learning tool).
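The cleaning, summarization, and t-test steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the Excel and SPSS workflow used in the study: the ratings, the column names (`website`, `chatgpt`, `custom_gpt`), and the helper functions are hypothetical, and a paired-samples t-test is assumed because each participant rated every tool.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical 1-5 Likert ratings: one row per participant, one column per tool.
responses = [
    {"website": 3, "chatgpt": 4, "custom_gpt": 5},
    {"website": 2, "chatgpt": 4, "custom_gpt": 4},
    {"website": 3, "chatgpt": 3, "custom_gpt": 5},
    {"website": 4, "chatgpt": 5, "custom_gpt": 5},
    {"website": 3, "chatgpt": None, "custom_gpt": 4},  # incomplete response
]
TOOLS = ("website", "chatgpt", "custom_gpt")


def clean(rows):
    """Keep only complete rows whose ratings are integers from 1 to 5."""
    return [
        row for row in rows
        if all(isinstance(row.get(tool), int) and 1 <= row[tool] <= 5 for tool in TOOLS)
    ]


def summarize(rows):
    """Return {tool: (mean, sample standard deviation)}."""
    return {
        tool: (mean(row[tool] for row in rows), stdev(row[tool] for row in rows))
        for tool in TOOLS
    }


def paired_t(rows, tool_a, tool_b):
    """Paired-samples t statistic and degrees of freedom for tool_a minus tool_b.

    Raises ZeroDivisionError if every participant has the same difference.
    """
    diffs = [row[tool_a] - row[tool_b] for row in rows]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


cleaned = clean(responses)
summary = summarize(cleaned)
t_stat, df = paired_t(cleaned, "custom_gpt", "website")
print(summary)
print(f"t({df}) = {t_stat:.2f}")
```

The sketch stops at the t statistic and its degrees of freedom; obtaining a p-value needs the t distribution, which a statistics package such as SPSS provides.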

1 & 2. From raw data to extensive data ready for analysis.

2, 3 & 4. Simplify the data, calculate average & SD.

5. Organize the data for the t-test and ANOVA. Read the data.

6. Read and interpret the data.

7. Generate insight.
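To make the ANOVA step more concrete, here is a small sketch of a one-way repeated-measures ANOVA, which fits a within-subjects design where every participant uses every tool. The source does not say which ANOVA variant was run in SPSS, so treat that choice as an assumption; the ratings and the `repeated_measures_anova` function are hypothetical.

```python
from statistics import mean


def repeated_measures_anova(scores):
    """One-way repeated-measures ANOVA.

    scores: list of per-participant lists, one rating per condition.
    Returns (F, df_conditions, df_error).
    """
    n = len(scores)      # number of participants
    k = len(scores[0])   # number of conditions (learning tools)
    grand = mean(value for row in scores for value in row)
    condition_means = [mean(row[j] for row in scores) for j in range(k)]
    subject_means = [mean(row) for row in scores]

    # Partition the total variation into conditions, subjects, and error.
    ss_conditions = n * sum((m - grand) ** 2 for m in condition_means)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_total = sum((value - grand) ** 2 for row in scores for value in row)
    ss_error = ss_total - ss_conditions - ss_subjects

    df_conditions = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_conditions / df_conditions) / (ss_error / df_error)
    return f_stat, df_conditions, df_error


# Hypothetical ratings; columns assumed to be website, ChatGPT, custom GPT.
ratings = [
    [2, 3, 4],
    [3, 4, 5],
    [2, 4, 6],
    [1, 3, 5],
]
f_stat, df_conditions, df_error = repeated_measures_anova(ratings)
print(f"F({df_conditions}, {df_error}) = {f_stat:.2f}")
```

Removing the between-participant variation (`ss_subjects`) from the error term is what distinguishes this from a between-subjects ANOVA: each participant serves as their own baseline.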

Key Insights from Quantitative Analysis

-

LLMs were more enjoyable and motivating learning tools compared to traditional websites.

-

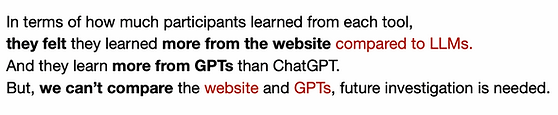

Custom GPTs outperformed ChatGPT in terms of satisfaction, perceived learning, and trustworthiness.

-

The website remained the most trusted source, with LLMs—especially ChatGPT—seen as less reliable due to their AI-generated nature.

-

While LLMs enhanced user experience, trust and information accuracy remained key concerns, limiting their perceived educational effectiveness.

2. Transforming Qualitative Data

-

Quantitative data collected from interviews.

-

Analysis methods: Inductive thematic analysis, bottom-up approach.

-

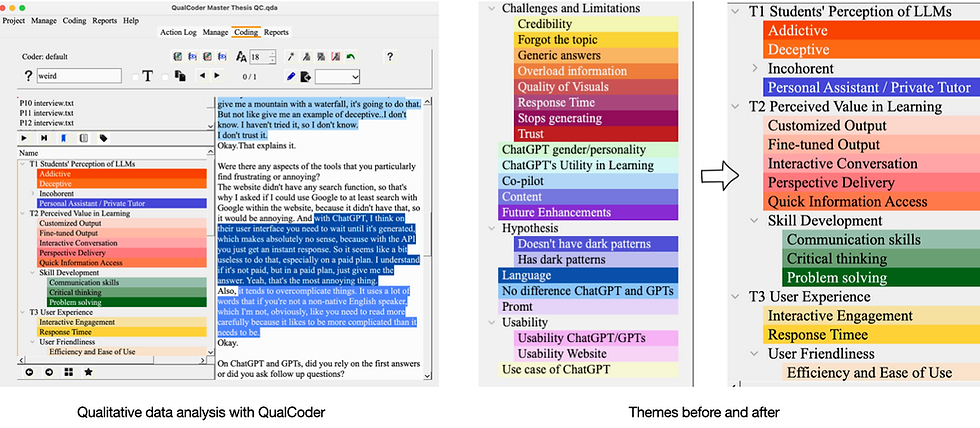

Tools: Nvivo, Autotekst, QualCoder

-

Process followed 6 phases of thematic analysis, summarized in 2 main parts.

Phase 1: Technical Setup and Transcription

- Interviews were recorded using the Nettskjema Diktafon app, which encrypts and securely uploads files to Nettskjema, a Sikt-approved platform for confidential data storage.
- Participants' recordings were transcribed into text using “Autotekst,” a speech-to-text tool powered by OpenAI’s Whisper V3 AI technology (Universitetet i Oslo, 2024).
- Each transcript was exported as plain text and stored in a secure folder on the researcher’s laptop.
- Each transcript was manually reviewed to ensure quality and remove errors before coding. This review also familiarized the researcher with the data and highlighted potential patterns for subsequent coding.

Phases 2-6: Coding and Analysis

- Coding involves labeling important parts of the data to organize and identify meaningful patterns, which later form themes. Themes are groups of related codes that summarize the key ideas or patterns in the data (Braun & Clarke, 2006; Sutton & Austin, 2015).
- The verbatim interview transcripts were uploaded separately to QualCoder, a user-friendly, open-source qualitative data analysis software (Brailas et al., 2023).
- The transcripts were coded using the inductive thematic analysis method, with a bottom-up approach, meaning themes emerged naturally from the data.
- The coding process began with marking significant phrases to create initial codes. Following Braun and Clarke’s guidelines, both explicit (semantic) and implicit meanings in the dialogue were considered during the coding process (Braun & Clarke, 2006).
- Codes with similarities or relationships were grouped to form themes and subthemes (see figure below). This process was iterative and cyclical, with themes refined and developed over time to ensure they reflected the data accurately.
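The grouping of codes into themes can be pictured as a simple data structure. The sketch below is illustrative only: the participant ids, coded segments, and code-to-theme assignments are hypothetical (the code labels echo issues named in the theme summaries), and in practice the mapping is built and revised by the researcher inside QualCoder rather than fixed up front.

```python
from collections import Counter, defaultdict

# Hypothetical coded segments: (participant id, code).
coded_segments = [
    ("P01", "loss of context"),
    ("P01", "quick access to information"),
    ("P02", "verbosity"),
    ("P02", "prompt crafting"),
    ("P03", "loss of context"),
    ("P03", "private tutor"),
]

# Hypothetical code-to-theme mapping, refined iteratively during analysis.
code_to_theme = {
    "loss of context": "Students' Perception of LLMs",
    "verbosity": "Students' Perception of LLMs",
    "private tutor": "Students' Perception of LLMs",
    "quick access to information": "Perceived Value in Learning",
    "prompt crafting": "Limitations and Challenges",
}


def group_by_theme(segments, mapping):
    """Count how often each code occurs, grouped under its theme."""
    themes = defaultdict(Counter)
    for _participant, code in segments:
        themes[mapping[code]][code] += 1
    return themes


themes = group_by_theme(coded_segments, code_to_theme)
for theme, codes in themes.items():
    print(theme, dict(codes))
```

Counts like these only help to organize the material; in thematic analysis a theme is judged by how well it captures meaning in the data, not by frequency alone.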

Thematic Analysis

The interview transcripts revealed participants' experiences and diverse opinions on using LLMs (ChatGPT-4 and GPTs) and a website (traditional method) for learning a conceptual topic (dark patterns). Four themes emerged from the data.

Summary of Theme 1: Students’ Perception of LLMs

The first theme was characterized by how participants perceived LLMs while using them. Perceptions were mixed: some participants viewed LLMs negatively, others positively.

Negative views stemmed from issues like loss of context, generic and shallow responses, bias from training data, verbosity, excessive and non-credible information, and a lack of transparency about data privacy. Others criticized ChatGPT’s overconfidence in its responses and its tendency to complicate simple answers. Additionally, some found ChatGPT’s ease of use, efficiency, and engaging nature potentially addictive, leading to overuse and reliance on the tool rather than seeking reliable sources. On the positive side, some participants appreciated LLMs as helpful tools, describing ChatGPT as a personal assistant for daily tasks and custom GPTs as a private tutor for academic purposes.

Summary of Theme 2: Perceived Value in Learning

This theme focused on the value participants derived from using LLMs as learning tools. Overall, participants responded positively, appreciating the quick access to information, customized output, and interactive conversations. Some noted that using LLMs improved their questioning skills, as effective prompts were necessary to receive accurate answers from ChatGPT. However, custom GPTs were often preferred over ChatGPT for learning in the educational context, offering greater value in providing new perspectives, fine-tuned responses, and fostering critical thinking and problem-solving skills.

Summary of Theme 3: User Experience

This theme was characterized by participants’ experiences of using LLMs as learning tools. Their experiences were generally positive, though they described both positive and negative aspects. On the positive side, users appreciated the interactive, conversational nature of LLMs, which made learning engaging and personal. Quick response times, a streamlined interface, and features like chat-saving and file analysis further enhanced their experience. However, frustrations arose from the need for well-crafted prompts, limited flexibility for users preferring less interaction, and a lack of control over learning pace. Some also found the response time slow, and waiting for answers to generate distracting.

Summary of Theme 4: Limitations and Challenges

This theme explored the limitations of LLMs and the challenges participants faced when using them for conceptual learning in educational contexts. Key challenges included crafting effective prompts, distinguishing accurate and credible information, dealing with the tool’s short-term memory issues, and concerns about data transparency and privacy. Participants also highlighted bias, outdated information, usage limits, limited guidance for new users, restricted accessibility and customization, and the lack of adaptation or personalization. Many of these issues stemmed from LLMs' technical and operational constraints, their nature as next-word predictors, or challenges with user-friendliness, creating barriers to a fully effective and seamless learning experience.

Key Insights from Qualitative Analysis

- Participants valued LLMs for their conversational, engaging style, ease of use, and ability to deliver fast, tailored responses.
- Custom GPTs were preferred for their domain-specific, teacher-like support, while ChatGPT was viewed as a generalist with issues like bias, verbosity, and loss of context.
- Despite overall positive experiences, challenges included prompt dependence, lack of learning control, difficulty verifying information, limited memory, and concerns over data privacy, customization, and over-reliance on LLMs.

Conclusion

The study explored the use of ChatGPT and custom GPTs in helping students understand conceptual subjects, comparing them with a traditional educational website.

Results:

- LLMs offered a more engaging, personalized, and motivating experience, with faster and easier information access than traditional methods. Custom GPTs, trained with domain knowledge and Bloom’s taxonomy, were especially effective.
- Despite these strengths, concerns about information credibility, over-reliance, and prompt quality limited trust and learning outcomes, especially for foundational concepts.
- Traditional websites remained the most trusted for learning from scratch, but custom-trained GPTs showed strong potential to be trustworthy, complementary learning tools that offer interactive, adaptive learning when responsibly integrated into learning environments.
- The study emphasized the need for AI literacy among students and educators, and called for collaboration between AI developers and educators to improve design, credibility, and integration in education.

Advocacy for Responsible Integration:

- While not a replacement for human teachers, AI tools can enhance learning when used responsibly.

Future Directions:

- Future studies should focus on improving the credibility, accuracy, accessibility, and usability of these tools to maximize their potential.

Contribution:

- The study provides valuable insights into the educational potential of AI tools, particularly their ability to support conceptual learning and enrich student experience.